Testing with Mocha and local files

With the CLI for Microsoft 365 we use Mocha for testing. Before getting active in open source, I must admit that I wasn't too aware of all the testing frameworks. We applied unit tests in our projects, but never to the extent that we reached 100% code coverage. When I did my first PR on the CLI, it turned out the project had 100% code coverage, and the only way to get a PR approved was to include tests and stay at 100%. Coming from a Microsoft-only background made it even more interesting: the tests also have to work on Linux and macOS, where paths and the file system behave differently. So when working on my first test case that mocks files, I learned quite a lot. A big shout-out to Waldek Mastykarz, who helped me make sense of it all.

Using Mocha to work with local files

When writing the test cases there are a few scenarios you want to test, such as success and failure when working with files. In our case we wanted to download files from an endpoint and store them locally. Since the API we are calling returns a stream, it makes sense to create a WriteStream and pipe the response into it. The following snippet is used to store the file locally.

    if (args.options.asFile && args.options.path) {
      request
        .get<any>(requestOptions)
        .then((file: any): Promise<string> => {
          return new Promise((resolve, reject) => {
            // Create the local file and pipe the response stream into it
            const writer = fs.createWriteStream(args.options.path as string);
            file.data.pipe(writer);

            // Reject when writing fails
            writer.on('error', err => {
              reject(err);
            });

            // Resolve with the path once the file has been closed
            writer.on('close', () => {
              resolve(args.options.path as string);
            });
          });
        })
        .then((file: string): void => {
          if (this.verbose) {
            logger.logToStderr(`File saved to path ${file}`);
          }

          cb();
        }, (err: any): void => this.handleRejectedODataJsonPromise(err, logger, cb));
    }

Because the CLI requires 100% code coverage, we need tests for every scenario, both success and error, and since we are working with promises we must also cover rejected promises. The tests run on different platforms too, so they must work on Windows as well as on POSIX systems. That can introduce issues when working with paths, as described in Confusing paths in Windows vs POSIX. Finally, we do not want tests to create actual files. Everything should stay in memory: we do not know whether the file system is accessible when the tests run, and we certainly do not want to write extra logic to clean up after a test. So, to write these tests, we need to mock the way we work with files.
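To make the path difference concrete, here is a minimal sketch using Node's built-in path module, which exposes both the Windows and the POSIX implementation on every platform. The folder names and the toPosix helper are made up for illustration and are not part of the CLI.

```typescript
import * as assert from "node:assert";
import * as path from "node:path";

// The same logical path, joined with each platform's rules.
const winPath = path.win32.join("downloads", "reports", "test1.docx");
const posixPath = path.posix.join("downloads", "reports", "test1.docx");

assert.strictEqual(winPath, "downloads\\reports\\test1.docx");
assert.strictEqual(posixPath, "downloads/reports/test1.docx");

// Hypothetical helper: normalize Windows separators so an assertion
// on a path gives the same result on every platform.
function toPosix(p: string): string {
  return p.split(path.win32.sep).join(path.posix.sep);
}

assert.strictEqual(toPosix(winPath), posixPath);
```

A test that hard-codes one separator style can pass on your machine and fail in CI on another platform, which is why it helps to normalize or to build expected paths with the same functions the code uses.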

The first thing we need is a mocked response, something we can process further. Second, we need to mock the response stream the code expects. To do that we use the PassThrough class, which passes its input bytes through as output, so you can write the mock data to the response. Once there is a response, we create a write stream using the same PassThrough class and use sinon.stub to stub our functions. With const fsStub = sinon.stub(fs, 'createWriteStream').returns(writeStream as any); you fake the creation of the stream and return the in-memory one instead. All that is left is firing the close or error event, using writeStream.emit('close'); or writeStream.emit('error', ...);. With real streams you have to assume that processing large sets of data takes some time. Our mock response is so small that it is processed instantly, so a short timeout should be implemented. Using setTimeout we can fake that it takes some time to get the data and store it locally. The delay also ensures the command has attached its listeners before the event fires.
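The stream mechanics can be tried in isolation, without Mocha or sinon. The sketch below is an assumption-laden illustration, not the CLI's code: saveStream, fakeResponse and fakeWriter are hypothetical names, and the writer is injected through a factory function where the real tests stub fs.createWriteStream.

```typescript
import * as assert from "node:assert";
import { PassThrough, Readable, Writable } from "node:stream";

// Hypothetical helper that mirrors the command's save logic. The writer
// factory is injected here; the real tests stub fs.createWriteStream instead.
function saveStream(
  source: Readable,
  filePath: string,
  createWriter: (path: string) => Writable
): Promise<string> {
  return new Promise<string>((resolve, reject) => {
    const writer = createWriter(filePath);
    source.pipe(writer);
    writer.on("error", (err: Error) => reject(err));
    writer.on("close", () => resolve(filePath));
  });
}

// In-memory stand-in for the API response body.
function fakeResponse(body: string): PassThrough {
  const stream = new PassThrough();
  stream.end(body); // write the body and mark the stream as complete
  return stream;
}

// In-memory stand-in for the file writer. The event is emitted after a short
// delay so the code under test has time to attach its listeners.
function fakeWriter(event: "close" | "error", error?: Error): PassThrough {
  const writer = new PassThrough();
  setTimeout(() => {
    if (event === "error") {
      writer.emit("error", error ?? new Error("write failed"));
    } else {
      writer.emit("close");
    }
  }, 5);
  return writer;
}

// Success path: the promise resolves with the path once 'close' fires.
saveStream(fakeResponse('{"data": 123}'), "test1.docx", () => fakeWriter("close"))
  .then((savedPath) => assert.strictEqual(savedPath, "test1.docx"));

// Failure path: the promise rejects with the emitted error.
saveStream(fakeResponse('{"data": 123}'), "test1.docx", () =>
  fakeWriter("error", new Error("Writestream throws error"))
).then(
  () => assert.fail("expected the promise to reject"),
  (err: Error) => assert.strictEqual(err.message, "Writestream throws error")
);
```

Nothing touches the disk here: both ends of the pipe are PassThrough instances, and the outcome is decided entirely by which event the fake writer emits.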

Translating that approach into pseudocode:

- create a mocked response you want to return;

- create a response stream we can work with;

- create a write stream we can work with;

- stub the write stream to return data;

- stub the firing of events we want to validate;

- validate the actual response and pass or fail the desired test;

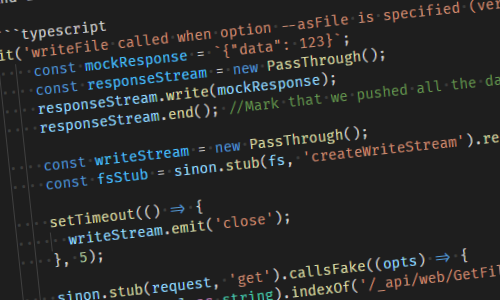

In turn, that results in the following code:

    it('writeFile called when option --asFile is specified (verbose)', (done) => {
      const mockResponse = `{"data": 123}`;
      const responseStream = new PassThrough();
      responseStream.write(mockResponse);
      responseStream.end(); // Mark that we pushed all the data.

      // Return an in-memory stream instead of creating a real file
      const writeStream = new PassThrough();
      const fsStub = sinon.stub(fs, 'createWriteStream').returns(writeStream as any);

      // Simulate the file being closed after a short delay
      setTimeout(() => {
        writeStream.emit('close');
      }, 5);

      sinon.stub(request, 'get').callsFake((opts) => {
        if ((opts.url as string).indexOf('/_api/web/GetFileById(') > -1) {
          return Promise.resolve({
            data: responseStream
          });
        }

        return Promise.reject('Invalid request');
      });

      const options = {
        verbose: true,
        id: 'b2307a39-e878-458b-bc90-03bc578531d6',
        webUrl: 'https://contoso.sharepoint.com/sites/project-x',
        asFile: true,
        path: 'test1.docx',
        fileName: 'Test1.docx'
      };

      command.action(logger, { options: options } as any, (err?: any) => {
        try {
          assert(fsStub.calledOnce);
          assert.strictEqual(err, undefined);
          done();
        }
        catch (e) {
          done(e);
        }
        finally {
          Utils.restore([
            fs.createWriteStream
          ]);
        }
      });
    });

    it('fails when empty file is created file with --asFile is specified', (done) => {
      const mockResponse = `{"data": 123}`;
      const responseStream = new PassThrough();
      responseStream.write(mockResponse);
      responseStream.end(); // Mark that we pushed all the data.

      const writeStream = new PassThrough();
      const fsStub = sinon.stub(fs, 'createWriteStream').returns(writeStream as any);

      setTimeout(() => {
        writeStream.emit('error', "Writestream throws error");
      }, 5);

      sinon.stub(request, 'get').callsFake((opts) => {
        if ((opts.url as string).indexOf('/_api/web/GetFileById(') > -1) {
          return Promise.resolve({
            data: responseStream
          });
        }

        return Promise.reject('Invalid request');
      });

      const options = {
        debug: false,
        id: 'b2307a39-e878-458b-bc90-03bc578531d6',
        webUrl: 'https://contoso.sharepoint.com/sites/project-x',
        asFile: true,
        path: 'test1.docx',
        fileName: 'Test1.docx'
      };

      command.action(logger, { options: options } as any, (err?: any) => {
        try {
          assert(fsStub.calledOnce);
          assert.strictEqual(JSON.stringify(err), JSON.stringify(new CommandError('Writestream throws error')));
          done();
        }
        catch (e) {
          done(e);
        }
        finally {
          Utils.restore([
            fs.createWriteStream
          ]);
        }
      });
    });
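One detail in the failing test is worth a note: the errors are compared through JSON.stringify rather than directly. The sketch below shows why, using a hypothetical FakeCommandError class as a stand-in; the real CommandError in the CLI may have a different shape.

```typescript
import * as assert from "node:assert";

// Hypothetical stand-in for the CLI's CommandError; the real class may differ.
class FakeCommandError {
  message: string;

  constructor(message: string) {
    this.message = message;
  }
}

const actual = new FakeCommandError("Writestream throws error");
const expected = new FakeCommandError("Writestream throws error");

// Two separate instances are never strictly equal, even with identical fields,
// so a direct strictEqual comparison throws an assertion error.
assert.throws(() => assert.strictEqual(actual, expected));

// Comparing the serialized form checks the content instead of the reference.
assert.strictEqual(JSON.stringify(actual), JSON.stringify(expected));

// assert.deepStrictEqual is an alternative that compares the fields directly.
assert.deepStrictEqual(actual, expected);
```

Either approach works for plain data classes like this one; which one a project uses is mostly a matter of convention.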

You can find some more details in the initial CLI for Microsoft 365 commit. It was a fun learning opportunity to work with (at least for me) more complex testing scenarios.